How does ChatGPT work?

Artificial intelligence is undoubtedly the hottest topic of 2023, and among all AI products, ChatGPT is especially popular.

ChatGPT is known for its ability to understand complex text and give correct, precise answers. However, few people know how this popular AI was built and how it works.

ChatGPT is an AI-powered chatbot that lets us hold conversations with it much like everyday conversations with people. ChatGPT's language model can answer a wide range of questions and help with tasks such as writing emails, articles, or even code.

But how does ChatGPT manage to understand questions and give accurate answers? An author at Towards Data Science recently examined this question in detail and shared the results; in this article, we share a translation of those results with you.

ChatGPT's accurate answers are the result of advanced technologies and years of research. Because the technology behind ChatGPT and the way it works can be complex, in this article we try to explain the details of this chatbot in simple terms.

To do this, we first introduce large language models. Next, we outline how GPT-3 was trained, and finally we examine reinforcement learning from human feedback, which led to ChatGPT's impressive performance. To learn more about ChatGPT, read on to the end of the article.

Getting to know large language models

A large language model (LLM) is a type of machine learning model built to interpret and generate human language. An LLM serves as a kind of technological infrastructure that can process large amounts of text; it is sometimes likened to a huge database, although what it actually stores are statistical patterns learned from its training text rather than a table of records.

Today, thanks to advances in technology and computing power, LLMs are far more capable than in the past: as the training dataset and the number of parameters grow, so do an LLM's capabilities.

Language models have traditionally been trained to predict missing words in a sequence of words. Before transformers, this was commonly done with long short-term memory (LSTM) networks, a type of recurrent neural network designed to work with sequential data such as text and audio.

In this training method, the model looks at the words surrounding a blank and, based on them, fills the blank with the most likely word. The process is repeated over a very large number of examples, so the model gradually learns to make accurate predictions.

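This is done in two forms: next-token prediction (NTP) and masked language modeling (MLM). In both, the model must choose the best word to fill a blank; the difference is where the blank is. In next-token prediction the blank is always at the end of the sequence, while in masked language modeling it can appear anywhere inside it.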
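To make the difference concrete, here is a minimal sketch of how training examples could be built from one sentence under each objective. The function names are hypothetical, words stand in for tokens, and the masked positions are passed in explicitly so the output is predictable; in practice they are usually chosen at random (BERT, for example, masks about 15% of tokens).

```python
def next_token_examples(tokens):
    """Next-token prediction: the blank is always at the end.
    Each example pairs the words seen so far with the word that follows."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_example(tokens, positions, mask="[MASK]"):
    """Masked language modeling: blanks can be anywhere in the sequence.
    Returns the corrupted sequence and the original tokens to recover."""
    corrupted = [mask if i in positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in sorted(positions)}
    return corrupted, targets


sentence = "Ali is enthusiastic about studying".split()

# NTP: one example per position, each predicting the next word.
for context, target in next_token_examples(sentence):
    print(context, "->", target)

# MLM: hide the third word and ask the model to recover it.
print(masked_example(sentence, {2}))
# (['Ali', 'is', '[MASK]', 'about', 'studying'], {2: 'enthusiastic'})
```

Note that the NTP model only ever sees words to the left of the blank, while the MLM model can use words on both sides.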

Limitations of training with LSTM

Training with LSTM also has limitations. Consider this example:

Ali is ___ when it comes to studying (enthusiastic / opposed)

To fill the blank correctly, you first need to know something about “Ali”, because people's interests differ. If you know that Ali likes studying, you will choose “enthusiastic”.

An LSTM, however, cannot weigh the importance of each word properly. It may give more weight to “studying” than to “Ali” in this sentence, and since many people dislike studying and homework, it may choose “opposed”.

In addition, an LSTM processes its input one element at a time, in order, rather than as a complete set. This limits its ability to capture complex relationships between words and their meanings.
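The sequential bottleneck can be illustrated with a toy recurrent network. This is a minimal sketch, assuming a plain scalar RNN with made-up weights rather than a real LSTM (which adds gates that help it retain information for longer): each step needs the previous hidden state, so the steps cannot run in parallel, and in this toy a signal in the first word has less effect on the final state than the same signal in the last word.

```python
import math


def rnn_states(inputs, w_in=0.5, w_rec=0.9):
    """Toy scalar recurrent network with hypothetical weights:
    h_t = tanh(w_in * x_t + w_rec * h_{t-1}).
    The loop cannot be parallelized: step t needs h_{t-1} first."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


baseline = rnn_states([0, 0, 0, 0, 0])
first_changed = rnn_states([1, 0, 0, 0, 0])  # signal in the first word ("Ali")
last_changed = rnn_states([0, 0, 0, 0, 1])   # same signal in the last word

print("effect of first word on final state:", first_changed[-1] - baseline[-1])
print("effect of last word on final state: ", last_changed[-1] - baseline[-1])
```

Information about early words has to survive every later update, which is one reason distant context such as “Ali” can end up underweighted.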

Transformer model

In response to this issue, a team at Google Brain introduced the transformer architecture in 2017. Unlike an LSTM, a transformer processes all of its input simultaneously.

Transformers also use a mechanism called self-attention, which measures how relevant each element of the input is to every other element, giving the model a more accurate picture of the input as a whole.

With this mechanism, transformers can examine the words of a sentence more accurately and understand how they relate to one another. And because the input is processed in parallel rather than one step at a time, transformers can be trained on much larger datasets.
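The core computation is scaled dot-product attention, as introduced in the 2017 transformer paper. The sketch below is a minimal pure-Python version: for each token it scores every token, turns the scores into weights with softmax, and mixes the value vectors accordingly. The 2-dimensional vectors are hypothetical, and for brevity queries, keys, and values are all the raw vectors; real models learn the embeddings and apply learned query/key/value projections first.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention.

    For each query (one per token), score every key, normalize the scores
    with softmax, and return the weighted average of the value vectors.
    Each row is computed independently of the others, so all rows can be
    computed in parallel (real implementations use matrix multiplication).
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wi * v[j] for wi, v in zip(w, values))
                        for j in range(len(values[0]))])
    return outputs, weights


# Hypothetical vectors for three tokens.
tokens = ["Ali", "likes", "studying"]
x = [[1.0, 0.2], [0.1, 1.0], [0.9, 0.4]]
_, attn = self_attention(x, x, x)  # queries = keys = values = x

for tok, row in zip(tokens, attn):
    print(tok, [round(w, 2) for w in row])
```

Each printed row shows how much one token "attends" to each token in the sentence, which is the relevance measure described above.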

Mechanism of self-attention in GPT

ChatGPT was developed by OpenAI, but it is not the company's only AI model: since 2018, OpenAI has been developing a family of models called Generative Pre-trained Transformers (GPT).

The first model was GPT-1, followed by improved versions: GPT-2 in 2019 and GPT-3 in 2020. In 2022, OpenAI unveiled its newest models, InstructGPT and ChatGPT.

The move from GPT-1 to GPT-2 was not a major technological leap, but GPT-3 brought significant changes. Improvements in computational efficiency allowed GPT-3 to be trained on far more data than GPT-2, giving it a more diverse knowledge base and the ability to perform a much wider variety of tasks.

All GPT models are built on the transformer architecture. The original transformer has an encoder that processes the input sequence and a decoder that generates the output sequence; GPT models use only the decoder part, producing text one token at a time based on the tokens that came before.

The decoder uses multi-head self-attention, which lets the model examine and analyze different parts of the sequence. To do this, text is first split into tokens (pieces of text such as words, parts of words, or punctuation), and each token is converted into a vector. Self-attention then computes weights that express how important each token is to every other token in the phrase.

In GPT, self-attention is masked: each token can attend only to the tokens before it, never to later ones, which matches the goal of predicting the next token. In addition, the multi-head mechanism does not compute word importance just once; it runs several attention computations in parallel, each of which can focus on different relationships, so the model can capture subtler and more complex relationships in the input.
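In GPT-style models, self-attention is causal: token i may look only at tokens 0 through i. The sketch below shows that mask and a simplified multi-head version. As a labeled simplification, it skips the learned projection matrices and just splits each vector into equal slices, one per head; real models apply learned per-head query/key/value projections and a final output projection. The function names and input vectors are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(x):
    """Single head with queries = keys = values = x.
    Token i may attend only to tokens 0..i (the causal mask),
    so the model never sees the tokens it is trying to predict."""
    d = len(x[0])
    out = []
    for i, q in enumerate(x):
        visible = x[: i + 1]  # causal mask: drop positions after i
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in visible]
        w = softmax(scores)
        out.append([sum(wj * v[c] for wj, v in zip(w, visible)) for c in range(d)])
    return out


def multi_head_causal_attention(x, num_heads):
    """Run causal attention separately on num_heads slices of each vector
    and concatenate the results position by position (simplified: no
    learned projections)."""
    d = len(x[0])
    assert d % num_heads == 0, "vector size must divide evenly into heads"
    size = d // num_heads
    heads = [causal_attention([row[h * size:(h + 1) * size] for row in x])
             for h in range(num_heads)]
    return [[val for head in heads for val in head[i]] for i in range(len(x))]


x = [[1.0, 0.0, 0.5, 0.5],
     [0.0, 1.0, 0.5, -0.5],
     [1.0, 1.0, 0.0, 1.0]]
out = multi_head_causal_attention(x, num_heads=2)

# Changing the last token leaves the earlier outputs untouched.
x_changed = x[:2] + [[9.0, 9.0, 9.0, 9.0]]
print(multi_head_causal_attention(x_changed, num_heads=2)[:2] == out[:2])  # True
```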

Problems and limitations of GPT-3

Although GPT-3 brought significant improvements in natural language processing (the processing of human language by computers), it still had problems and limitations. For example, it often had difficulty following users' instructions correctly and so could not help them as intended. It could also produce incorrect or entirely made-up information.

Another important issue is that GPT-3 cannot properly explain how it works, so users do not know how it reached its conclusions and decisions. GPT-3 also lacks adequate filters and may generate offensive or hurtful content. These are the problems OpenAI tried to address in later versions.

ChatGPT and its formation stages

To solve the problems of GPT-3 and improve the overall performance of standard LLMs, OpenAI introduced the InstructGPT language model, whose training approach was later used to build ChatGPT.

InstructGPT was a big improvement over previous OpenAI models, and its new approach of using human feedback during training produced much better outputs. This training method is called reinforcement learning from human feedback (RLHF), and it plays an important role in helping the model understand people's goals and expectations when answering questions.

Building this training approach and developing ChatGPT involved three general steps, which we explain below.

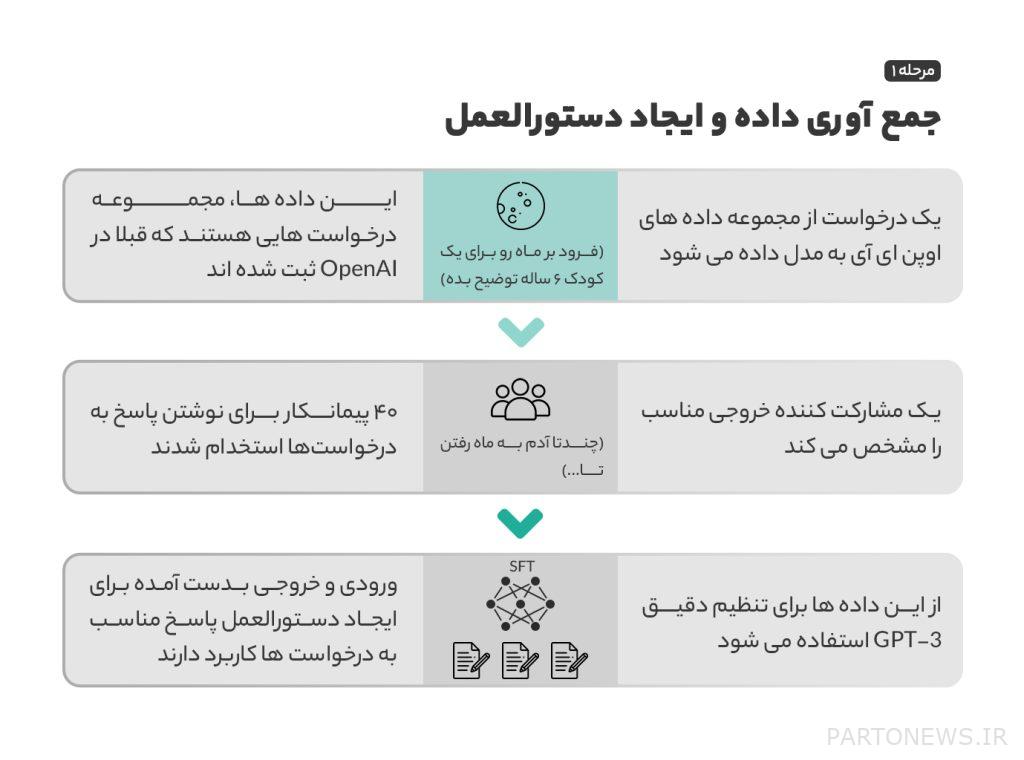

Step 1: Supervised Fine Tuning (SFT) Model

In the early stages of development, to improve GPT-3, OpenAI hired forty contractors to create a supervised training dataset for the model to learn from. The prompts in this dataset were collected from real users' requests and from data stored by OpenAI. This dataset was used to fine-tune the model further, producing GPT-3.5, also called the SFT model.

The OpenAI team tried to make the dataset as diverse as possible and removed all data containing personally identifiable information. After collecting the prompts, OpenAI asked the participants to identify and categorize the ways users make requests and ask questions. This analysis identified three main types of request:

- Direct requests; for example, “Tell me about a topic.”

- Few-shot requests, which are more complex; for example, “Based on the two sample stories I sent, write a new story on the same topic.”

- Continuation requests, which ask for something to be continued; for example, “Finish this story based on its introduction.”

Finally, combining the prompts stored in OpenAI's database with those written by hand by the participants produced 13,000 input/output samples for training the model.
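Training on these input/output samples is ordinary supervised learning: the model is pushed to assign high probability to each token of the desired answer. The sketch below computes that loss (average negative log-likelihood) from made-up token probabilities. The function name is hypothetical, and skipping the prompt tokens so that only the answer is scored is a common choice that we assume here, not a detail stated in this article.

```python
import math


def sft_loss(token_probs, is_response):
    """Mean negative log-likelihood over the response tokens.

    token_probs[i]: probability the model gave to the correct token i of a
                    sample (prompt followed by the desired answer).
    is_response[i]: True if token i belongs to the answer. Prompt tokens are
                    skipped, so the model is trained to produce the answer.
    """
    nll = [-math.log(p) for p, r in zip(token_probs, is_response) if r]
    return sum(nll) / len(nll)


# Hypothetical probabilities for a 5-token sample:
# 2 prompt tokens followed by 3 answer tokens.
probs = [0.05, 0.10, 0.60, 0.90, 0.75]
mask = [False, False, True, True, True]
print(round(sft_loss(probs, mask), 3))
```

The loss reaches zero only when the model gives every answer token probability 1, so minimizing it moves the model toward the demonstrated responses.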

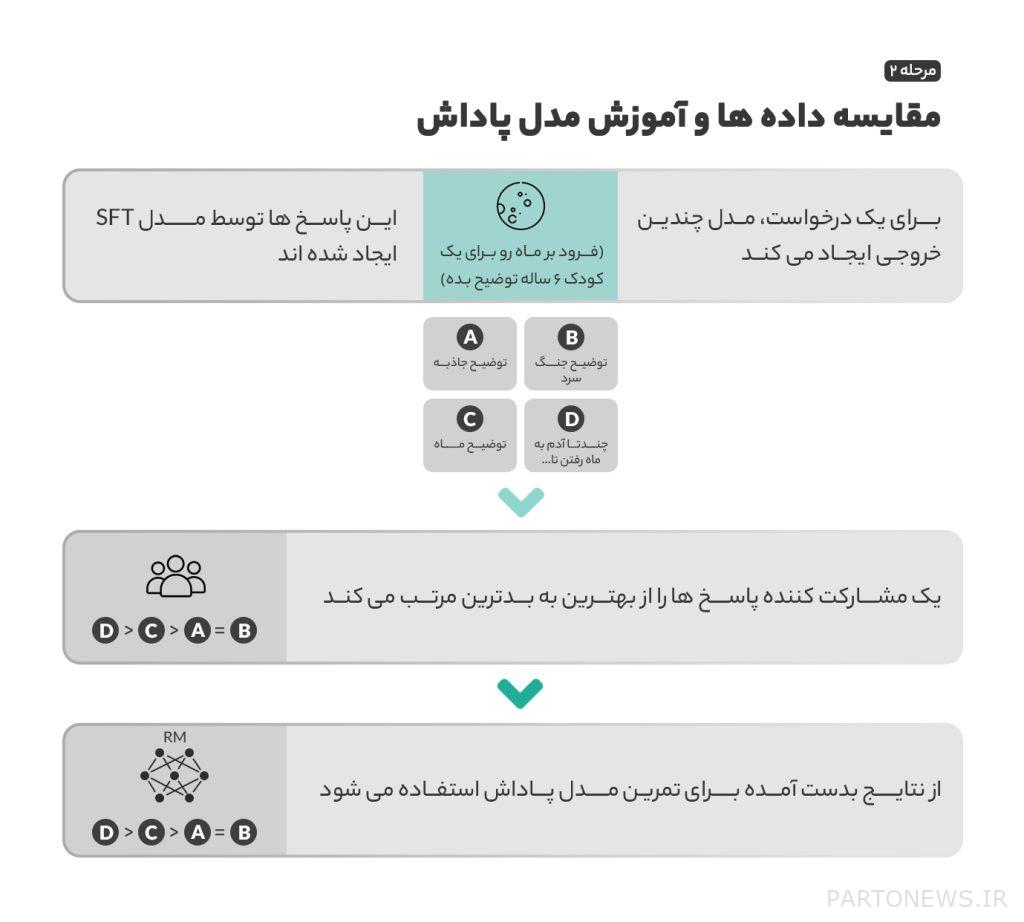

Step 2: Reward model

After fine-tuning in the first step, the SFT model can give more appropriate responses to user requests. However, it was still incomplete and needed improvement, which was made possible by a reward model and reinforcement learning.

In reinforcement learning, the model tries to find the best action in different situations: it is rewarded for appropriate choices and penalized for inappropriate ones. Through these rewards and penalties, the SFT model learns to generate the best possible outputs for its inputs.

To use reinforcement learning, we need a reward model that decides which outputs are rewarded and which are penalized. To train the reward model, OpenAI showed the participants 4 to 9 outputs of the SFT model for each prompt and asked them to rank the outputs from best to worst. These rankings provided a way to measure the quality of the SFT model's outputs and to keep improving it.
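A ranking of K outputs can be broken into K*(K-1)/2 "better vs. worse" pairs, and the InstructGPT paper trains the reward model with a pairwise loss of the form -log(sigmoid(r_better - r_worse)). The sketch below shows that idea; as simplifications, the reward model is a hypothetical lookup table of scores (a real one is a neural network that also sees the prompt), and the output labels are made up.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked):
    """ranked lists one prompt's outputs from best to worst.
    Every (better, worse) pair becomes one comparison, so K outputs
    give K*(K-1)/2 comparisons (6 for K=4, 36 for K=9)."""
    return list(combinations(ranked, 2))


def reward_model_loss(pairs, reward):
    """Average of -log(sigmoid(reward(better) - reward(worse))).
    The loss is small when the reward model scores the preferred
    output well above the rejected one."""
    total = 0.0
    for better, worse in pairs:
        diff = reward(better) - reward(worse)
        total += math.log1p(math.exp(-diff))  # equals -log(sigmoid(diff))
    return total / len(pairs)


# A labeler's ranking of four hypothetical outputs, best first.
pairs = ranking_to_pairs(["C", "A", "D", "B"])

# Two hypothetical reward models: one agrees with the labeler, one does not.
agrees = {"C": 2.0, "A": 1.0, "D": 0.0, "B": -1.0}.get
disagrees = {"C": -1.0, "A": 0.0, "D": 1.0, "B": 2.0}.get

print(len(pairs))                           # 6
print(reward_model_loss(pairs, agrees))     # lower loss
print(reward_model_loss(pairs, disagrees))  # higher loss
```

Training the reward model means adjusting its scores so this loss goes down, that is, so it learns to reproduce the labelers' preferences.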

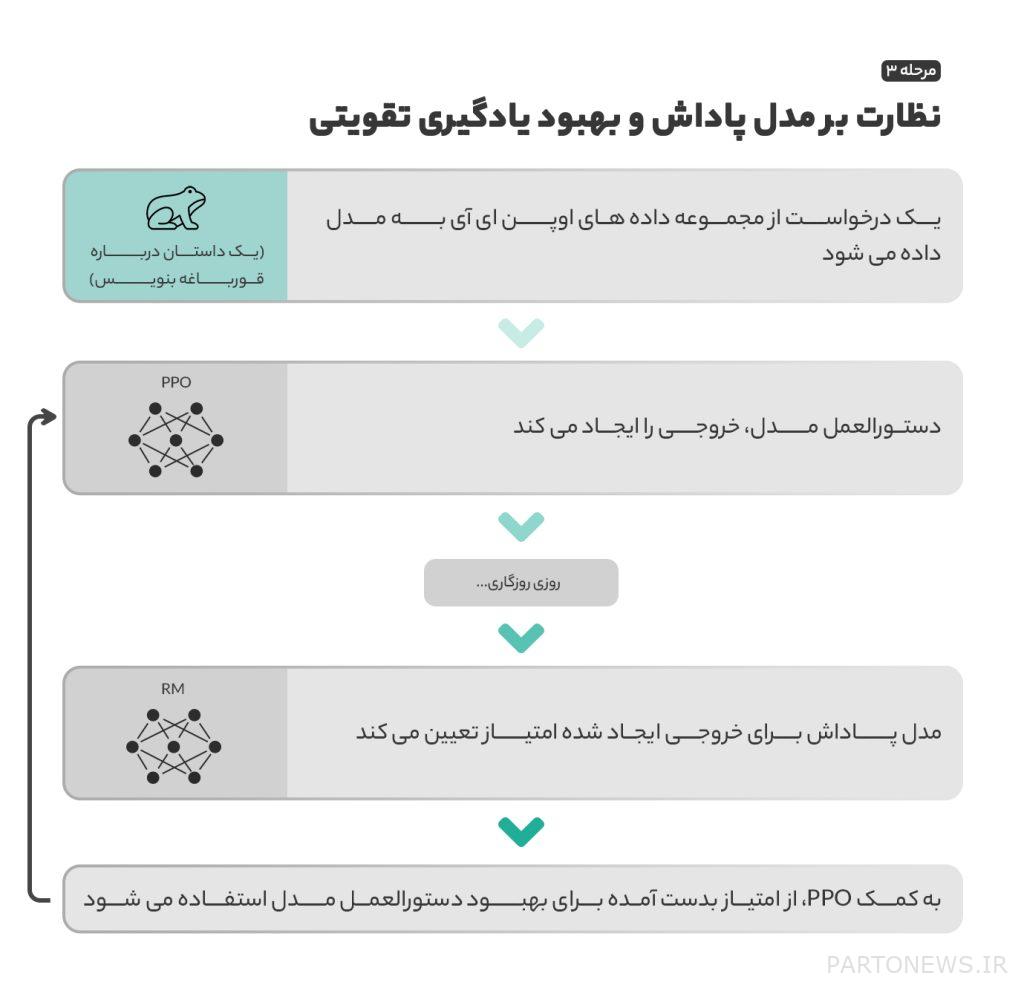

Step 3: Reinforcement learning model

Once the reward model was ready, in the third step the model was given random prompts and tried to produce the outputs that would earn the highest reward. The reward model from step 2 scores each prompt-response pair, and these scores are then fed back to the model to improve its performance.

The algorithm used to update the model based on these rewards as responses are generated is called Proximal Policy Optimization (PPO). It was developed in 2017 by OpenAI co-founder John Schulman and his team.
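For a language model, each generated token can be treated as an "action" taken in the context of the prompt and the tokens so far. PPO's key idea is a clipped objective that limits how far one update can move the model away from its previous version. The sketch below computes only that clipped objective for given probability ratios and advantages; the advantage estimation and value network that a full PPO implementation needs are omitted, and epsilon = 0.2 is an assumed, commonly used setting.

```python
def ppo_clipped_objective(ratios, advantages, epsilon=0.2):
    """PPO's clipped surrogate objective (higher is better).

    ratios[t]     = pi_new(token_t | context) / pi_old(token_t | context)
    advantages[t] = how much better token t turned out than expected
    Clipping the ratio to [1 - epsilon, 1 + epsilon] and taking the minimum
    removes any incentive to move the policy far from the old one in a
    single update.
    """
    total = 0.0
    for ratio, adv in zip(ratios, advantages):
        clipped = min(max(ratio, 1 - epsilon), 1 + epsilon)
        total += min(ratio * adv, clipped * adv)
    return total / len(ratios)


# A good token (positive advantage) whose probability already rose 50%:
# the gain is capped at (1 + epsilon) * advantage.
print(ppo_clipped_objective([1.5], [1.0]))
# A bad token (negative advantage) whose probability already halved:
# the objective is flat at (1 - epsilon) * advantage, so there is
# no incentive to push it down further in this update.
print(ppo_clipped_objective([0.5], [-1.0]))
```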

In this setup, PPO is combined with a Kullback-Leibler (KL) divergence penalty, which is very important for this model. In reinforcement learning, a model can sometimes learn to exploit its reward system to reach a high score, a problem known as reward hacking. The model may then produce patterns that earn a high score even though the output is not actually good.

The KL penalty addresses this problem. It measures how far the model's outputs drift from those of the SFT model from step 1 and subtracts that amount from the reward, so a higher score alone is not enough: the new output must also stay reasonably close to what the SFT model would produce.
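The InstructGPT paper writes the reward used during RL as R(x, y) = r(x, y) - beta * log(pi_RL(y|x) / pi_SFT(y|x)), where r is the reward model's score. Averaged over many sampled responses, that log-ratio estimates the KL divergence between the two models. Below is a minimal sketch of the per-response calculation; the function name, the log-probabilities, and beta = 0.02 are hypothetical.

```python
def kl_penalized_reward(rm_score, logprobs_rl, logprobs_sft, beta=0.02):
    """Reward used during RL: rm_score - beta * log(pi_RL(y|x) / pi_SFT(y|x)).

    logprobs_rl[t] and logprobs_sft[t] are the log-probabilities that the
    model being trained and the frozen SFT model assign to token t of the
    same generated response y. Summing the per-token differences gives the
    log-ratio for the whole response. beta is a hypothetical value.
    """
    log_ratio = sum(a - b for a, b in zip(logprobs_rl, logprobs_sft))
    return rm_score - beta * log_ratio


# Same reward-model score, but the second response is far more likely
# under the RL model than under the SFT model, so it is penalized.
close_to_sft = kl_penalized_reward(1.0, [-1.0, -1.2], [-1.1, -1.2])
drifted = kl_penalized_reward(1.0, [-0.05, -0.05], [-3.0, -4.0])
print(close_to_sft, drifted)
```

A larger beta keeps the trained model closer to the SFT model; a smaller one lets the reward model's score dominate.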

Model evaluation

After the main steps of building and training the model are complete, the model is evaluated on a test set that was held out during training, to determine whether the new model performs better than the previous one. This evaluation has three parts.

First, helpfulness: the model's overall performance and its ability to follow the user's instructions were assessed. In these experiments, participants preferred InstructGPT's outputs to GPT-3's about 85% of the time.

Second, truthfulness: the new model was better at providing information, and with PPO training its outputs contained more correct and accurate information. Finally, harmlessness: InstructGPT's tendency to produce, or avoid producing, inappropriate, derogatory, and hurtful content was investigated.

The evaluations showed that the new model can greatly reduce inappropriate content. Interestingly, when the model was deliberately asked to produce inappropriate responses, its outputs were significantly more offensive than those of GPT-3.

By the end of the evaluation phase, InstructGPT had shown major improvements, and these methods went on to power the popular ChatGPT chatbot. If you have more questions about how ChatGPT was developed and how it works, you can read the official article published by OpenAI.